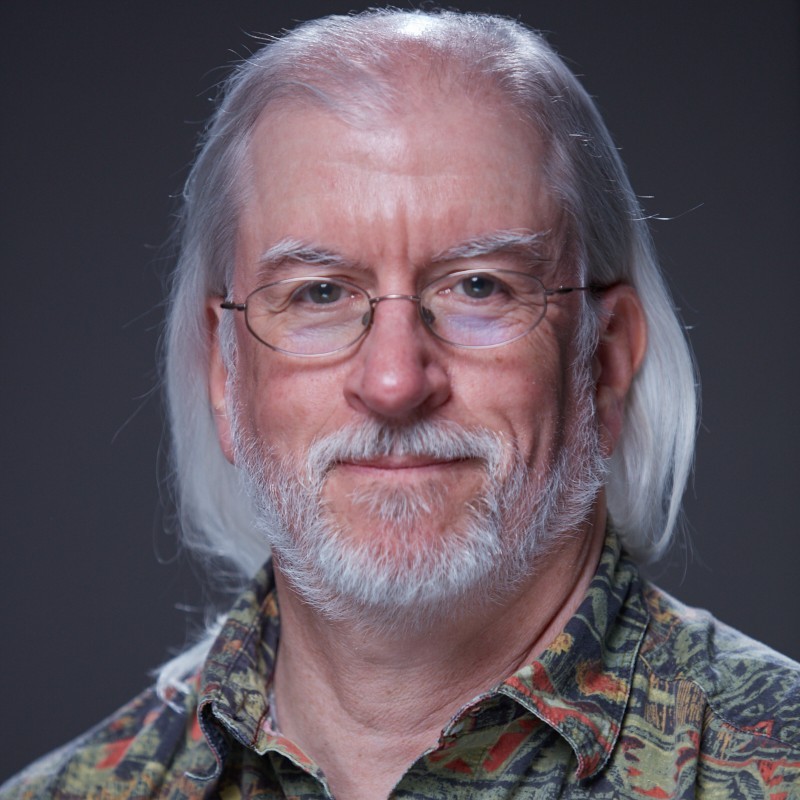

[00:00:00] Speaker A: Welcome to this episode of our show, True DataOps. I'm your host, Kent Graziano, the Data Warrior. In each episode, we bring you a podcast discussing the world of DataOps with the people who are making DataOps what it is today. Be sure to look up and subscribe to the DataOps Live YouTube channel, where you will find the recordings of all our past episodes. If you missed any of them, now is your chance to catch up. Better yet, go to truedataops.org and subscribe to the podcast now. My guest today is industry expert, analyst, author, speaker, and world traveler Wayne Eckerson. He's a well-known data strategy consultant and president of Eckerson Group. Welcome back to the show, Wayne.

[00:00:49] Speaker B: It's a pleasure to be here, Kent.

[00:00:52] Speaker A: Yeah, you were actually my first guest, on season one, episode one. So it's been a little while since we've had you back on the show. I'm glad we were able to get you on this time.

[00:01:06] Speaker B: Well, a lot's changed since then, so a lot to discuss.

[00:01:09] Speaker A: Absolutely. So for the folks who don't know you very well, can you give us a little bit of your background in data management?

[00:01:18] Speaker B: Yeah, believe it or not, I've been doing data and analytics in one shape or form for 30-plus years.

I started back in the early 90s as a research analyst at Patricia Seybold Group, then spent 12 years at The Data Warehousing Institute, and now I have my own company, Eckerson Group, a consulting firm that helps organizations develop strategies for data governance, data management, data architecture, and self-service analytics, among other things.

[00:01:55] Speaker A: Yeah, I think it was probably when you were at TDWI that we first met years ago.

[00:02:01] Speaker B: Yeah.

[00:02:01] Speaker A: And the webinars you used to do for them, and the white papers you wrote back in the day, before all this cloud stuff hit us.

[00:02:10] Speaker B: That's right. And you were my Deep Throat connection at Snowflake.

[00:02:15] Speaker A: Yes, I was.

So, as you and I have discussed, this season we're going to take a step back and think about the world of True DataOps: how it's evolved and what we've learned over the last couple of years. You were one of the contributors to the philosophy and concepts of True DataOps, along with the seven pillars, which is one of the reasons I wanted to get you back on the show.

For listeners who haven't looked at the seven pillars, or haven't even heard of them before, you can find them at truedataops.org under the seven pillars, or you can scan the QR code on screen right now to get caught up.

It's been four years, basically, which was kind of surprising when we looked at it: four years since we published the truedataops.org website and then wrote the Dummies guide to DataOps. So the question now is: do the seven pillars of True DataOps, and the underlying philosophy we all authored, still resonate in the industry?

[00:03:29] Speaker B: Yeah, you know, it does. It still resonates. I spent some time reviewing them today and they all strike home.

I think, you know, the, the core motivation is still there.

Software engineering is more advanced than data engineering, and by comparison we on the data side are still more artisanal. You know, cowboys still reign supreme.

And to me, DataOps was always the thing that was going to get us from that artisanal craft, where it took a real artist or one person to build something, to more industrialized delivery that can scale and deliver higher value with fewer defects, faster. Faster, better, cheaper: that's the promise of DataOps, and I think it still holds true. All the pillars still resonate deeply with me. Things have changed since we formed them, though: one is the advent of GenAI in a big way, and the other is wider acceptance of distributed data architectures.

[00:04:52] Speaker A: Right, yeah, true.

[00:04:53] Speaker B: And development of data products are now happening in a more decentralized fashion, giving rise to data mesh, data fabric. So, you know, we might want to talk about how those things are affecting the seven pillars. But you know, I think the pillars themselves are still very strong.

I'm also a very big believer in automated testing: developing tests during development, and then, I think we could say this more explicitly, reusing those same tests in execution.

[00:05:26] Speaker A: Right, yeah.

[00:05:29] Speaker B: The other thing that changed is we're using AI to help automate testing too, but go ahead.

[00:05:35] Speaker A: Oh yeah. We have the pillar on automated regression testing and monitoring, and with AI and GenAI we now have a lot more opportunity to automate that. It has definitely been a very weak spot in the data world for the decades we've been involved in it. Back in the day, getting somebody to even test their ETL code before they put it in production was hard. They might have tested it once, on a very small, very static data set in development. And then there was the classic; you and I go back far enough to remember the days when people just said, "Oh, it compiled."

That used to be one of the markers in software development: you'd write some code and run it through a compiler, whether it was Fortran or COBOL or something else. If it compiled, the compiler didn't find anything wrong with your code, but that didn't mean the code actually did what it was supposed to do. Then you'd move it into production and discover it wasn't really right, because nobody tested it. That carried over into the data world for sure. Like you said, it used to be very artisanal, and I think that's where the role of the data architect, or data warehouse architect, and the technical lead came in. I know I played that role quite a bit: herding the cats and making sure we actually tested everything before we moved it to production. Are we moving the right version of the code to production? What version is it? Do we even have a version?

Is the data model up to date? Are we about to build the right table structures in production, let alone deploy the right ETL and transformation code and make sure it works? There was no built-in discipline for that.

It came down to whoever the lead was, if there was a lead, if there was somebody actually in charge. I remember having to keep all this stuff in my head. If we got lucky, we might have made some checklists to ask, okay, did we actually get all this right? I guess that's where DataOps itself comes in, as opposed to DevOps: we're applying those principles and disciplines to the data world, right?

[00:08:20] Speaker B: Yeah, yeah, yeah. It's definitely taking what worked so successfully in software engineering and trying to apply it in the data world. It's a little harder in the data world, because there's this extra variable called data that's really pretty slippery.

You can't just put it in your code repository.

But what you're describing is the whole artisanal thing: it depended on the leader, and the leader had to have good guidelines and checklists, because there are a lot of things to keep track of. When you have a DataOps platform, it takes a lot of the guesswork out of it. There's still a discipline in using it, though.

[00:09:01] Speaker A: Right. You still have to use it.

Even if you've got a good tool, you still have to use the tool appropriately. Right. You know, just because you have a data modeling tool doesn't mean your design is good.

[00:09:14] Speaker B: Exactly.

[00:09:15] Speaker A: Right. You can still build a badly designed, poorly performing data warehouse using a really good data modeling tool.

[00:09:24] Speaker B: Yeah. And that's why I think Data Ops is even more important in this new distributed world we're in, you know, or decentralized world, where, you know, what's the common denominator? If you have all these business domains with their own data engineers building out data products. Right.

How are you going to make sure that whatever they build is scalable, it's reliable, it's accessible, it's got the appropriate metadata and all those good things that make it usable?

Well, we're seeing that DataOps could be the lingua franca that ties everything together in a distributed environment. For example, one of Snowflake's and DataOps Live's big customers is Roche Pharmaceuticals, and that's exactly how they're using DataOps.

[00:10:14] Speaker A: And they're doing Data Mesh.

[00:10:16] Speaker B: Yeah, yeah, exactly. So how do you do a data mesh, which is a decentralized way of building data products? Well, it turns out, I think, that you have to have a centralized platform. You need something like Snowflake that you can build on centrally and share data from centrally, while every domain also has its own area to use. Then you have a tool like DataOps Live that provides the discipline and the mechanism for each domain to actually build reliable, scalable data products. Right.

[00:10:53] Speaker A: Yeah. And it's interesting, because the original thesis on data mesh, I'll say, was that you didn't want a centralized platform. But you and I have seen over the last couple of years, with companies like Roche, that it's a lot easier if you have a centralized platform where, like you said, each domain has its own area and is isolated to that extent. At the same time, another of the theories of data mesh was that everything had to be shareable: whatever the data product was, you had to be able to publish it, and it had to be discoverable. The data sharing and data marketplace mechanisms in Snowflake make that eminently easy. You don't have to go build something to make it happen. And this is where DataOps Live certainly comes in: a central tool that everybody can use to apply some standard rules. Early on in the data mesh debates, we talked about whether you need a... what did we call it? The idea was to eliminate central IT, but at the same time we needed a center of excellence. Roche certainly did this: they had a small team that built out some templates, set some standards, and then used DataOps Live to implement them. After that, it's a lot easier to train the domain teams to be self-sufficient.

And again, I think one of the pillars of DataOps is self-service and collaboration, and that's really hard to do without some standards. With a centralized tool and a centralized platform, it becomes a lot easier.

[00:12:57] Speaker B: Right? It's the paradox of Data Mesh in my opinion. I mean there are some purists that advocate that yeah, it goes away, the data warehouse goes away, everybody has their own systems and somehow it all magically comes together.

But it doesn't; we all know that. So the paradox is that to really decentralize product development, you need a strong central platform that everyone uses and shares. There might be some exceptions along the edges, but you have to have a strong central platform like Snowflake, plus DataOps Live. I think Roche also had Immuta for data access management and Monte Carlo for data observability, and all the groups used those tools. They were free to use their own ETL tools and their own BI tools, but they all used that shared data platform, which made things so much easier. And they also had to have really strong governance around everything they were doing.

[00:13:54] Speaker A: Right. I think that's another one of our seven pillars: data governance and security. Especially when you go to a distributed approach like a data mesh, how do you make sure people are building data products that are sustainable, repeatable, shareable, and discoverable if you don't have some rules around it and a way to enforce those rules? That's where a DataOps platform definitely comes in handy, because you can deploy it to the domain teams without them having to build their own data governance process or just work from checklists and hope. Did we do the review meeting? Did everybody sign off? Having a platform that can enforce some of that is certainly very helpful.

[00:14:54] Speaker B: Yeah, yeah. Building your standards into a platform makes things so much easier for everybody.

[00:15:03] Speaker A: And given that, in a data mesh approach, the teams are independent and self-sufficient, if you're eliminating IT, as it were, then what skills do the people on the domain teams need? How can we support them with templates, standards, and tools, so that not everybody has to be a data engineer to make the whole paradigm work?

[00:15:38] Speaker B: Right, right.

Yeah. DataOps Live as part of this centralized platform for decentralized development is absolutely critical, because it builds the standards into the tools people are using, and ideally all those products align. That's where governance is so important. From a data consistency and alignment perspective, you don't want two products on the same subject with conflicting definitions, different levels of granularity, different metric definitions, and things like that. Things have to line up if you're going to have an enterprise view, and you have to assume every organization, at least at the CEO and CFO level, wants that enterprise view, not a kaleidoscope where each domain has its own view of the world and nothing comes together. So the governance is really important.

Now, you mentioned something I want to press you on. One of my quibbles with the data mesh methodology was: okay, let's distribute development to the business domains, they'll create these data products, and then what? How do people discover them? You mentioned the data marketplace. I've been pressing on that for the last two years, because I think it's a really critical piece, not only of a data mesh but of any organization, especially one that wants to communicate with external users, but also one that wants to share data more freely within the organization. That is a huge problem for a lot of the companies we consult with.

[00:17:21] Speaker A: Yeah. I think there have been a couple of answers to that. On the discoverability side, the standard answer, I'll say, is data catalogs. You've got to be able to publish a data catalog that uses business terminology, semantic models, and taxonomies, so that business people can search it and find out whether the data exists in the organization, regardless of which domain team owns it, in the case of a data mesh. At a minimum, you need something like that.

But then the question comes up very quickly is like, well, okay, how do I get to it? Does a data catalog get me to the data in a form that I can consume? Which of course is kind of the definition of a data product.

And that's what I like so much about Snowflake's approach.

Very early on at Snowflake, we just had data sharing, which meant you could create a database and allow other Snowflake accounts to see it in read-only mode, so they could query it just like their own database. So you had that, but somehow you had to put it all together. Then when they came out with the marketplace technology, that gave you a place to publish it. Over the years they've made it a lot easier for a less technical person, I'll say, to publish a data product with all sorts of metadata, the kind you and I would always want to see: actual descriptions in plain English, maybe a sample of the data set. This effectively becomes the app store for data, right?

[00:19:12] Speaker B: Yes, right.

[00:19:15] Speaker A: People could go in, see what's available in their region, and see whether it was free or going to cost them something. Now there are mechanisms to do it internally within an organization, which is what Roche did. They were able to publish internally, so different divisions of Roche can look at the internal marketplace, say, "I need that data," push a couple of buttons, and be granted access, so they can query it and join it to their own data.

That's at the pure data level. The next question, from a data product perspective, is that in some cases the products are actually apps. Snowflake has its native app framework now, too, and those apps can be published on a marketplace. You could build, say, a forecasting app based on some shared data and publish it, so somebody else in the organization can go into that app store for data and see that there's a forecasting app, a revenue app, all these apps somebody already built. And it's all inside of Snowflake, so it's governed and secure, and both sides know what's going on.

But I think one of the challenges, to your point on data mesh, is this: you've produced a data product.

Now what? Another question is how you evolve that product. I think that's where we get back into DataOps, because it will very rarely be one and done.

So how do you apply changes, enhancements, and even bug fixes in a shared environment like that without breaking anything downstream, where one of the consumers of your data product, whatever they built on top of it, be it a data set or an app, suddenly gets different results?

So that's where product management comes in, right?

[00:21:29] Speaker B: Absolutely. Yeah, yeah. So that is the definition of product. It's not a one and done thing. Let's call that a data asset. Right. A data product you're going to continue to enhance, which means you need a process to enhance the code. And that's when you get into all kinds of issues if you don't have a real disciplined approach to it, which DataOps gives you.

[00:21:51] Speaker A: Right. And that's where you get the pillars on version control and modularity, along with automated regression testing. If you've got that built in and you make a change to your data product, those regression tests should kick off automatically before you try to publish it. That becomes a mandatory part of governance. Okay, there's been a change, there were some check-ins and checkouts, we've got a branch, and we now want to move that branch to production. But first you have to run these tests on it and make sure they all pass before you can get approval to put it into production. And of course you need to send notifications to the consumers as well, telling them that you changed it.
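As a rough illustration of the promotion gate Kent describes (a change on a branch, regression tests that must all pass, promotion only on a clean run, then consumer notifications), here is a minimal Python sketch. Every name in it (`DataProduct`, `promote`, the test names, the notify callback) is hypothetical and is not DataOps Live's or Snowflake's API; real regression tests would query the branch's data rather than return constants.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


# Hypothetical model of a published data product (not a real vendor API).
@dataclass
class DataProduct:
    name: str
    version: int
    consumers: List[str] = field(default_factory=list)


def promote(
    product: DataProduct,
    branch_version: int,
    tests: Dict[str, Callable[[], bool]],
    notify: Callable[[str, str], None],
) -> Tuple[bool, List[str]]:
    """Promote a branch to production only if every regression test passes."""
    # Run every regression test against the candidate branch; collect failures.
    failures = [name for name, test in tests.items() if not test()]
    if failures:
        # Gate closed: production keeps the current version, nobody is notified.
        return False, failures
    # Gate open: publish the new version and tell each downstream consumer.
    product.version = branch_version
    for consumer in product.consumers:
        notify(consumer, f"{product.name} updated to v{branch_version}")
    return True, []


if __name__ == "__main__":
    sales = DataProduct("sales_star", 1, ["finance", "marketing"])
    checks = {
        "row_count_matches": lambda: True,  # stand-ins for real data checks
        "no_null_keys": lambda: True,
    }
    ok, failed = promote(sales, 2, checks, lambda c, m: print(f"notify {c}: {m}"))
    print(ok, failed, sales.version)
```

The design choice worth noting is that notification happens only after a successful promotion, so consumers never hear about a version that failed its tests.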

[00:22:38] Speaker B: Right, right, yeah. So they know that they're getting a different version of the product when they go to their data marketplace.

I did want to ask you one thing about the data marketplace. I've been pressing on this issue for two years and I started with the data catalog vendors, like, hey, you guys should be publishing this metadata in a marketplace so people can actually get the data.

A lot of them had some provisioning mechanisms where you could request permission, the request would go through a ticketing system, and someone would say yes. But then you'd have to go find the right tool to access that data product. So it's one step removed from a marketplace, which is really the retail equivalent of shopping for data.

That's a whole different interface and experience. So I'm trying to figure out where the technology for these internal data marketplaces is going to come from. Catalogs? Data platforms like Snowflake? Data pipeline vendors, since the end result of a pipeline is a product? The data commerce vendors that are already building marketplaces for external consumption? Or is it going to be DataOps Live? You're sitting right in the middle of everything.

[00:24:06] Speaker A: Well, yeah. I think you know that Snowflake built an internal tool, I'm forgetting right now what they ended up calling it, that they've done a couple of talks on. It uses DataOps Live to publish all the demos to the sales engineers.

They have nearly a thousand people worldwide using it. If a sales engineer needs to demo one of the new Cortex features for a customer, they can search for it, find it, click a button, and it sets up the whole environment so they can run the demo.

So they've leveraged DataOps Live to build their own internal, I'd almost call it a product factory, like you mentioned before. They can easily roll these demos out and keep them updated as Snowflake adds new features. Somebody builds the demo and publishes it, and now sales engineers all over the world have access to it. They don't have to download anything onto their laptops while they're out in the field. They connect to their Snowflake account, push a couple of buttons, and the demo is ready to go.

And if they get halfway through the demo and the customer says, "Okay, great, let's talk about something else," and then they move on to another customer, one of the old challenges was: where was I? Do I need to reset that demo? Now they just run it, and it automatically checks: you were halfway through, so if you're with the same customer, it can pick up where you left off. If you need a fresh version of the demo, you push a button and it resets the whole thing for you. That used to be a lot of work. The sales engineers I worked with were constantly doing this, and in the early days I had to do it myself. Building these environments took a lot of scripts, you had to have certain things installed on your laptop, and it didn't always work. Now it's a smooth, seamless operation.

I actually talked with the head of that group about this on the podcast last spring.

So that's been good, and I think they even did a demo of it at Big Data London. So you can build an environment now that makes this easier. Like you said, the question for the vendors is: where is this functionality? I think Snowflake has certainly built a model of how it could look.

On the producer side, it's really easy to build a simple data product, say a star schema with sales analytics in it, and publish it through a very non-technical interface. Then anyone with access to the marketplace can see it. Again, you can publish it internally, just inside your organization, or externally on the Snowflake Marketplace.

To me, it's revolutionary, and it's the answer to a lot of the questions that came up with data mesh about how to actually do this.

[00:27:35] Speaker B: Yeah.

[00:27:36] Speaker A: Now, if you don't want to use Snowflake, and you're still using Teradata or Oracle or one of the other cloud vendors, or you're trying to build it yourself on AWS, Azure, or Google BigQuery, okay: you've got to figure out how to build a lot of that yourself. Like you said, some of the vendors, the data catalog vendors, the observability vendors, and a lot of the data engineering vendors that have lineage built in, can all be used for this. But now you're into the buy-versus-build question. Even though you might be buying some tools to help, you're still building the entire platform, I'll say.

Does that come up very often in your, in your consulting? Do you have these conversations about buy versus build with, with folks?

[00:28:33] Speaker B: Yes, all the time.

Most of our clients are really still trying to get the basic fundamentals of data management and governance in place.

And even these are some very large brand name companies.

And we find that over time things tend to slip back into chaos. And then they're faced with a question, okay, we've got legacy technology.

How do we migrate this to something more modern? Is it worth the time and the effort and the money, Right, to do that? Do we build it ourselves?

At this point, most of our clients are going to buy something, or more likely buy it and heavily configure it. But there are still a ton of organizations out there that are trying to get out of the blocks, or that are saddled with what was really great technology 15 years ago and is not so great now, because we can do so much more with modern platforms like Snowflake, with DataOps Live built in, to support our data environments as they exist. The old world was pretty much: if you have a hammer, everything looks like a nail.

You gotta stick all your data into the warehouse, you gotta model it before anybody can query it. And we've discovered now that, I mean, that doesn't work all the time and maybe even not most of the time, it's just too slow. Right. To meet user needs. So you need to augment that with some other types of technologies to get data where it's at.

When companies have these monolithic, legacy-based data environments and they're stuck, the migration is going to take a long time. You can build new apps one at a time on the new platform while you're running the old one, but it's still going to take a long time.

We tend to recommend injecting some agility right at the outset to give users some relief.

A data fabric tool is one way to do that.

It's a tool you can give to power users who are already assembling data from wherever. Now you can get a tool that actually really helps automate the discovery process and helps them find data, evaluate it, pull it down, munge it together and even visualize it.

Then on the casual user side, if they've got lots of BI tools and thousands of reports, a tool like a BI portal can give casual users the same kind of immediate relief that a data fabric gives power users. We tend to recommend those kinds of capabilities.

And that's mostly a buy type of a situation, right?

[00:31:57] Speaker A: Yeah, well, we could probably spend another hour talking about data fabric and everything else, but we've got to wrap up. What's coming up next for you? I think you've got a webinar or two coming up.

[00:32:12] Speaker B: Yeah. We publish content every other month on a single topic: we bring our archives together with partner information and write some new content. Next month the topic is actually data fabric and data mesh, so I guess that's why it's top of mind.

We have a webinar on that topic coming up on October 31st, and the week before, we'll produce a newsletter with links to all of our content on it.

[00:32:44] Speaker A: All right. And there's a QR code there on the screen for folks. You can just scan that to get to that information about Wayne's next webinar. So what's the best way for folks to connect with you, Wayne?

[00:32:56] Speaker B: Sure. LinkedIn is always good, or just my email, which is very simple: Wayneckerson.com.

[00:33:06] Speaker A: Well, thank you so much for joining me today and sharing your insights. At times I wasn't sure who was interviewing whom. It was a great discussion, the best interactive discussion I've had in quite a while. It's good to talk with someone I've been working with for years and have this kind of conversation. So much has changed, and there's so much to consider in the industry, so it's great catching up with you. Thanks to everybody online who joined us. Be sure to join me again in two weeks, when my guest will be longtime colleague Kevin Baer, who was one of the early sales engineers at Snowflake and is now VP of Data Cloud Architecture there. I think that's going to be a really good conversation. As always, be sure to like the replays from today's show, tell your friends about the True DataOps podcast, and don't forget to go to truedataops.org and subscribe to the podcast so you don't miss any future episodes.

Until next time, this is Kent Graziano, the Data Warrior signing off for now. See ya.