Episode Transcript

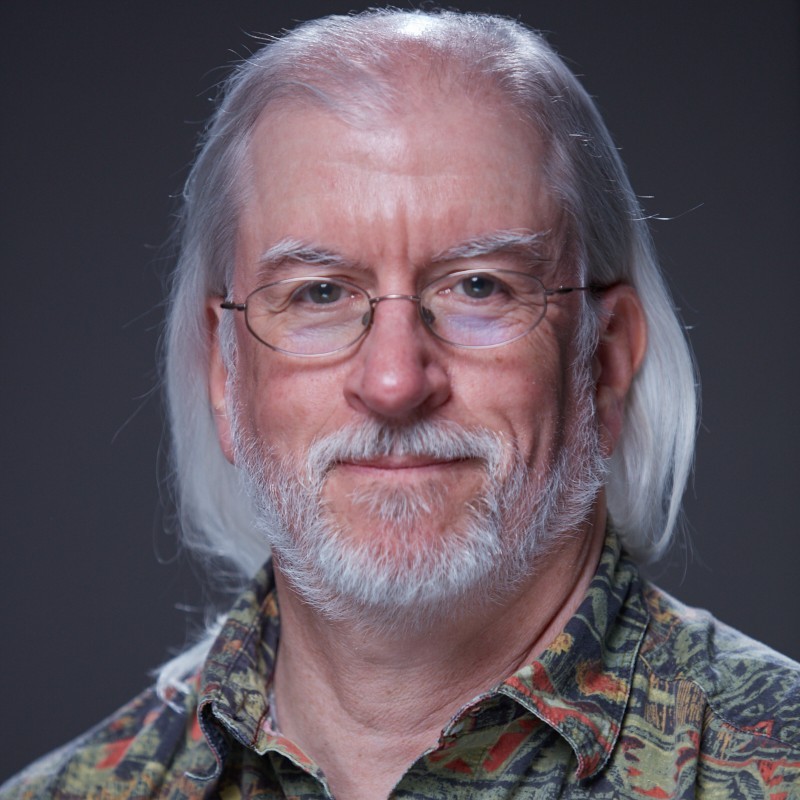

[00:00:00] Speaker A: Superhero. Each episode this year we are exploring how DataOps is transforming the way organizations deliver trusted and governed AI-ready data. And if you've missed any of our past episodes, you can always go to our DataOps Live YouTube channel, subscribe, and be notified of any upcoming episodes. Today I'm really excited to have as my guest Ronald Steelman, the author of Mastering Snowflake DataOps with DataOps Live and Mastering the Snowflake SQL API. Welcome to the show, Ronnie.

[00:00:30] Speaker B: Hey Keith. Great to be here.

[00:00:32] Speaker A: Yes, I'm excited.

A few months back I did get to see that you have this new book and I'm really looking forward to getting that a little bit deeper into that with you. But before that, why don't you give everybody a little bit of a background on who you are and your experience in the industry.

[00:00:50] Speaker B: Yeah, definitely.

So, Ronnie Steelman. I've been working with data, analytics, and AI for going on 21 years now.

Really, this all started when I was in consulting back in 2018 and got introduced to Snowflake. At the time we were a Salesforce consulting firm and we did a lot of data integrations, and Snowflake became kind of the cloud go-to for Salesforce customers, so I fell in love with Snowflake, for lack of a better way to phrase it.

And then as I just got more involved with the platform and the technology and all the great things they were doing, I decided to just become an author for the platform. And so my first book, Mastering the Snowflake SQL API was really born from that juxtaposition between being an engineer but also being a data person and being able to build applications that could have a number of ways of integrating into Snowflake.

And having that engineering background, DevOps was really important. I've been a customer and an implementer of DataOps Live a number of times.

It just made sense that the next iteration of my journey as an author was to focus on DataOps and Snowflake: taking those DevOps principles and flipping them to support data instead of engineering and development.

[00:02:20] Speaker A: Yeah, and I can definitely appreciate your take on the love for Snowflake. I've been in this business a little bit longer, close to 30 years, and when I was first introduced to Snowflake, I kind of had that "oh, where have you been my whole career?" moment, because it finally felt like a solution that fit what the data warehouse practitioner really needed and the problems we felt. So, your new book, Mastering Snowflake DataOps with DataOps Live: An End-to-End Guide to Modern Data Management. It's perfect timing today, because it's actually being released in, what, two days? On the 21st?

[00:02:55] Speaker B: Yes, on the 21st. So you can pre-order it on Amazon now. They've got a special pre-order discount that you can take advantage of. And then, you know, we've done all the negotiations, so you should be able to get two-day shipping for all you Prime members out there.

And we're really excited to, you know, hear what people think about the book.

[00:03:15] Speaker A: Yeah. And you briefly touched upon it in the intro, but I want to kind of come back to it. What inspired you to say, hey, there's a gap in the industry here, I need to write this book? Tell us a little bit about the background, how you came to write this book.

[00:03:31] Speaker B: Yeah. So again, when I was in consulting and we were doing Salesforce consulting and data, one of the big things that we did was took DevOps and flipped that into Salesforce DevOps and we built a tool from the ground up. I'd had experience building, you know, DevOps type tools when I was with IB and then I ended up transitioning over to a company from consulting that did nothing but DevOps for Salesforce. And just seeing how DevOps in the engineering world and DevOps in the Salesforce world just really built trust in the development process.

I started diving deeper into how can those same principles be applied to build trust in your data process, your data pipelines, your data architecture, your data governance.

And through that journey, I discovered the DataOps Live platform. Fell in love with it.

This is not a hard sell. This is just me being passionate about something.

But there's just so much out there. DataOps is new.

People understand DevOps, but they don't understand how DataOps fits into that whole development life cycle. And it is different.

And there are some blog posts and people doing some great things, but there's just not really that comprehensive go-to for "what is DataOps?" and "okay, now I understand DataOps, where do I start?"

[00:04:56] Speaker A: Right, right. And you know, I can put a little bit of my own experience into this. So, you know, my first journey into Snowflake, I was a senior architect at a Fortune 100 insurance company. And we identified, I'm going to say we called it Core Services because, you know, I was a data guy, I wasn't a DevOps person.

And we said, hey, how are we going to deploy our stuff? How are we going to do all of our infrastructure as code? All these things became requirements.

We as a team spent at least, I want to say, three months hacking together something good enough to get by. Then, same with me, a few years later when I was consulting, I was introduced to DataOps Live and I was like, oh wow, this is fantastic. I can definitely appreciate that, because I have been there, and in a lot of data organizations like mine, we're data people, not DevOps people. Right? And those practices are foreign to a lot of data teams.

[00:05:59] Speaker B: Yeah, definitely.

[00:06:01] Speaker A: So the foreword of the book mentions "a panoramic overview and a microscopic examination." How do you balance strategy and deep technical content?

[00:06:17] Speaker B: Definitely, definitely a challenge for sure because especially, you know, someone that likes to be hands on, you can really nerd out on this kind of stuff. But then you, you know, I wanted to make sure that the book was a great fit for people that aren't hands on, but are maybe decision makers, you know, thinking like your head of data or your chief data officer, Chief Technology officer.

How can they pick up this book and see the value of what they could bring to their team and to their organization, while at the same time catching those people that are boots on the ground? And so in this book what I did is I kind of broke it into parts. Your first part is very much talking about just DataOps as a concept.

Your second part is talking about the core concepts and technologies of DataOps. So dbt and Git and all of those things.

Then in the final part, that's where we dive into the DataOps Live platform and how a single platform can cohesively bring all of this together and really alleviate a lot of the pain points of the build it yourself approach.

[00:07:28] Speaker A: Yeah.

You talked about the earlier chapters and what their focus is. One thing I loved in there was DataOps as that bridge between data engineering and operations. Right? And you also kind of see that evolving in the Snowflake ecosystem as well. What's your take there?

[00:07:51] Speaker B: We do. So I mean, if you dive into DataOps, it's definitely code based, but then it's also data related as well. We're not just moving code from lower environments to upper environments, we're moving data from lower to upper environments and we're testing every step of the way.

It's continuous, it's always repeatable.

And what we're seeing in the Snowflake world is their introduction of things like integrated dbt and Snowflake Horizon. We've got streams for pipelines within Snowflake, and we've got Streamlit apps now. So there's just so much that Snowflake itself is bringing into their world that kind of bridges those gaps. Having a tool that doesn't necessarily replace that, but can sit on top and enhance it, that's kind of what we mean when we talk about bridging that engineering and operations gap.

Because Snowflake is so much more than just data. It's your data catalog. I mean, it can serve so many things in one ecosystem.

[00:08:55] Speaker A: Yeah. And that's even why, I think, they moved away from being the data warehouse platform; now they consider themselves the AI Data Cloud. You know, it's interesting that you talk about the number of parts and pieces.

I think it was a couple of weeks ago or last week, I don't remember now from a time perspective, at Snowflake Build, I unofficially counted, between new capabilities and enhancements to existing capabilities, around 40 new things that were discussed. And to your point, how does an organization manage all of that within Snowflake, like you said, with trust and everything like that? It's crazy, right?

[00:09:38] Speaker B: Yeah. Snowflake is constantly, I mean, you know, constantly rolling out new things. And, you know, as an author, that's one thing that I struggle with is writing in such a way that by the time you get through writing and editing and the publishing process and all that stuff, that what you're putting on the shelf is, you know, it's still relevant.

[00:09:58] Speaker A: Yeah, exactly. I know there are many parts to DataOps, but one of them I call the foundational aspect, right, which is CI/CD and automation and collaboration for organizations.

Where do you see many organizations struggling in that space?

[00:10:20] Speaker B: I mean, the struggle itself, I think, is with understanding what DataOps is and why it's important. As far as that struggle to start taking that first step, it literally comes down to execution. The first step into anything new, I mean, it's a fundamental shift in your paradigm and way of thinking. That first shift is scary. And I think a lot of companies strive to get to perfection before they layer CI/CD in. They want the perfect architecture, the perfect data. And what they don't realize is that CI/CD is what helps you get to that perfection. So it doesn't matter where you are in your journey, it never hurts to just take that first step off the cliff, get CI/CD in place, and then watch as that ideal data product, data environment, whatever you're building, accelerates towards that goal of what you see as the gold standard.

[00:11:22] Speaker A: Yeah. It's interesting because I can remember being in scenarios where it's like, Keith, just get it to production, just get it into production.

But then after that comes the speed, I'm going to say, at which you need to be able to iterate and get it back into production as you have to make changes and new requirements come in, and before you know it, it's hard to go back and implement it. And then you're relying on, you know, Bob in the corner hitting the button every time you're trying to release. And in today's day, you can't just release once.

You need to be releasing almost hourly, at least daily at the rate we are going today.

[00:11:59] Speaker B: Yeah. And I would say that companies, honestly, that are more focused right now on improving their architecture, improving the quality of their data, improving the trust, they're doing a lot of development and a lot of change. So that's a great time to bring in CI/CD. Whereas for companies that feel like they've reached that perfected state, that's when you give it a little more thought as you walk through it, because you don't want to break any of that perfection.

And I think a tool like DataOps Live, where a lot of that stuff can be a lift and shift into the process without having to go back and do a lot of manual stuff. I think that's what makes a tool like this so helpful because at any stage in your journey, it's almost literally plug and play.

[00:12:47] Speaker A: Yeah, that's a great transition into my next question, because I've been in organizations, like many companies, where DevOps is not anything new. For their development and application people, that's been pretty well ingrained for a long time, and what I found is that they ask: well, why don't you just use these DevOps solutions? Why don't you just adopt these? What does DataOps Live bring to the table that other DevOps solutions out there don't, and why would people want to use it instead of the things many application developers have been using for years?

[00:13:25] Speaker B: Yeah, we talk about that in the book. It's very important to distinguish that while DevOps and DataOps share a lot of the same underlying principles, the paradigm is completely different.

In DevOps you're moving code, but in data you're moving code and moving data.

The process is just so different.

I think that if you try to use traditional DevOps tools, you're trying to square-peg-round-hole the whole solution, and it doesn't make a lot of sense. Now, there are solutions that are DevOps at their root that have been flipped to support DataOps and things like that.

But again, what you get with the DataOps Live platform that I really like is the cohesiveness. Everything is in one tool. A lot of it's out of the box. I've definitely built DataOps processes where I had to manually script GitHub Actions, and I found myself reinventing the wheel.

And there's just so much that a unified platform can bring to the table in terms of time, money, effort. I mean, all the things that a lot of companies don't really pay attention to, but they should because your bottom line isn't just impacted by how much money you make, it's also impacted by how much money you can save.

[00:14:48] Speaker A: Yeah. And onto my next question, or maybe this is going to be more of a statement: from my experience, your business consumer does not want to hear, "Well, it's going to take me a couple of days to deploy this or release this into production." Right? That's really where the features it brings, like automation, real-time monitoring, and integration, really come into play. Right?

[00:15:14] Speaker B: Yeah. And you know, one of the things that we always talk about when we talk about DataOps is that it's not just the trust that you bring to your data and your infrastructure, it's also the turnaround time.

One of the key components I hit on is when people ask, why do we need a DataOps solution in our environment?

My number one go-to is that time to completion, that time to production, drops dramatically. Would you rather spend three weeks manually testing and doing all this stuff and then hope that what you did in test works exactly the same way in production? Or would you rather spend a couple of days doing all of this, with testing that is continuous as you go, and then when you're ready for production, you click a button, and if it worked in test, it's working 100% the same way in production?

[00:16:00] Speaker A: Yeah. And one of the things I also like is that, you know, many organizations are working with kind of disparate agile teams, maybe in different domains, and you're able to sit here and say, I know that for Team A and Team Z, testing was done the same way, that critical tests were done the same way. That's the whole federated governance aspect of it.

[00:16:22] Speaker B: Right, exactly.

[00:16:25] Speaker A: So for the reader, what are some aha moments that they might experience when they realize that DataOps Live can automate a lot of the stuff we're talking about?

[00:16:36] Speaker B: Yeah. So in my experience, when I talk to people about DataOps Live as a platform, one of the things they focus in on is, why don't I just go get dbt Core? Or why don't I just go get dbt Cloud? And so I have to pull them back and be like, yes, dbt is very fundamental to DataOps, and it's a great tool, and it's baked into the platform, but the platform itself brings so much more. For me, the SOLE engine gives you infrastructure as code. dbt just doesn't really support infrastructure as code. Being able to manage your databases and your schemas, and then dive into the dbt piece and manage your models, access control, RBAC policies, all of that can be managed. And being able to quickly adjust roles, quickly adjust users, roll that kind of stuff out and just know that it's working the way that you intended.

That's amazing. And then, because everything is in YAML, there's governance. Right now we're seeing, especially with Horizon in Snowflake, the rise of data catalogs. data.world just got bought and now they're merging into a larger organization.

As we see data catalogs on the rise, your data catalog is only as good as the metadata associated with Snowflake. I think that a tool like DataOps Live and the SOLE engine and those types of things really help roll out metadata at the same time that you're rolling out everything else. It's not an afterthought.

[00:18:08] Speaker A: Yeah. And to your point about Snowflake's growth and the amount of stuff in Snowflake that you want to manage: just for example, this past week, Snowflake launched Snowflake Intelligence. Right? And we were a launch partner for Snowflake Intelligence. And, you know, I was actually having a conversation with people at Snowflake recently, and that's more stuff for people to organize. How do you manage the semantics? How do you manage the agents? They need to be tested, they need to be deployed, just as much as a table or a dynamic table. And so the number of capabilities that you need to manage as code in Snowflake is growing exponentially at this point.

[00:18:48] Speaker B: It really is. Yes. And so I just can't harp enough on having a unified place where you can do all of that and you can see the entire ecosystem together.

It's just great.

Again, going back, I've definitely done this type of solution outside of the DataOps Live platform, and having to jump between dbt Cloud and then jump into GitHub and then jump into Airflow, you're jumping all over the place and trying to keep the big picture in your head, and it makes it so much harder whenever you're doing that.

[00:19:23] Speaker A: Absolutely. And soon we've got to get you introduced to Metis, our AI data engineering helper, to help you accelerate a lot of those things inside DataOps Live.

So let's kind of talk about what's really hot in the industry today, which is AI. Right? And a lot of the focus, especially for us, is on what we're calling AI-ready data. So in your first chapter, you talked about applying DataOps principles to AI and ML pipelines. How do you see DataOps enabling AI-ready data?

[00:20:02] Speaker B: The biggest thing for AI ready data that a lot of companies don't understand is not volume of data, it's clean, trustworthy data.

And when you implement something like CI/CD, you have that trust that your data is clean. You know where it's at, you know where it's going.

I think one of my favorite features in DataOps Live, honestly, and maybe it's coming from my DevOps background, is the zero-copy clone capability: I can branch in Git and spin up a sandbox that's all mine, and I can do whatever I want in it. I can test it, I can send it off to whoever's running merges and tell them that it's ready to be merged. They can merge it to dev, we can let it run for a few hours, run for a day. We can merge it to QA or UAT, let our users go in, make sure that nothing broke from a user perspective, and then push it on up to production. And every step of the way I know that what I've built is trustworthy. I have trust in the process.

And if you don't trust your data, you're not going to have good AI ready data.
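Editor's note: the branch-per-sandbox idea Ronnie describes can be sketched roughly as below. This is only an illustration, not how DataOps Live implements it. The function names, the `SANDBOX` prefix, and the `PROD_DB` source database are hypothetical; the only real piece is Snowflake's `CREATE DATABASE ... CLONE` statement, which performs the zero-copy clone.

```python
import re


def sandbox_name(branch: str, prefix: str = "SANDBOX") -> str:
    """Turn a Git branch name into a database identifier (hypothetical naming scheme)."""
    # Collapse anything that is not a letter or digit into single underscores.
    cleaned = re.sub(r"[^A-Za-z0-9]+", "_", branch).strip("_").upper()
    if not cleaned:
        raise ValueError("branch name has no usable characters")
    return f"{prefix}_{cleaned}"


def clone_statement(branch: str, source_db: str = "PROD_DB") -> str:
    """Build the Snowflake SQL that zero-copy clones source_db for one branch."""
    return f"CREATE DATABASE IF NOT EXISTS {sandbox_name(branch)} CLONE {source_db};"


if __name__ == "__main__":
    # Only prints the statement; running it against Snowflake is left to the pipeline.
    print(clone_statement("feature/add-orders"))
```

Because a clone shares storage with its source until data changes, each branch can get its own sandbox without paying to copy the data up front.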

[00:21:12] Speaker A: Yeah. I'm going to second your perspective on zero-copy clone. I think it is the most underappreciated capability in Snowflake. The first time I saw it I thought, this can't be true, right? And I did everything I could to try to break it, and I'm like, this is, you know, black magic. I don't know how they're doing this, but it is amazing. And I think you kind of touched upon it, but many organizations are going into that AI world and they're running as fast as they can. If you could tell them anything to prepare themselves and their data for AI, what would your opinion be on what they really need to focus on?

[00:22:01] Speaker B: Trust. I mean, in everything I do in the data world, I follow the 80/20 rule, except when it comes to AI, and then I follow the 90/10 rule.

But I always put in a little bit of skepticism, a healthy amount that says, listen, I can't tell you how many times I've preached that your data will never be 100%. If you're striving for 100%, you're just never going to get there. It's just data changes too much. You've got the human component, the data that's coming in. You never know what someone's putting into a field. You never know if developers are putting in field checking or quality checks at that layer. You're never going to be 100%. If you can get 90 to 95% trust in your data, I think you're AI ready. And I think that's probably the most important thing is just having that trust.
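Editor's note: one rough way to make the "90 to 95% trust" idea concrete is to treat trust as the share of data quality checks that pass. That definition, the function names, and the check names below are assumptions for illustration only; Ronnie does not describe a specific formula.

```python
def trust_score(results: dict[str, bool]) -> float:
    """Fraction of data quality checks that passed (assumed definition of 'trust')."""
    if not results:
        raise ValueError("no check results supplied")
    return sum(results.values()) / len(results)


def is_ai_ready(results: dict[str, bool], threshold: float = 0.90) -> bool:
    """True when the pass rate meets the threshold (0.90 mirrors the 90% figure)."""
    return trust_score(results) >= threshold


if __name__ == "__main__":
    # Hypothetical check names; nine of ten pass.
    checks = {f"check_{i}": True for i in range(9)}
    checks["email_format"] = False
    print(trust_score(checks), is_ai_ready(checks))
```

The threshold is a parameter on purpose: the point in the conversation is that the target is something below 100%, not one exact number.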

[00:22:51] Speaker A: Yeah. In the end, and I heard this from, I don't remember who exactly it was, but it always stuck.

I don't know if it was Bill Inmon or if it was Dan Linstedt. It was one of them. But, you know, we do what we do because of our business. Right? If it wasn't for our business customers, we wouldn't have a reason to be here. And so to your point, if your customers can't trust what you're doing and the data you're giving them, then you're not really doing your job effectively.

[00:23:27] Speaker B: I mean, can you imagine going to the CEO and reporting sales numbers that are completely inaccurate because something was wrong? Like your sales pipeline data was not fully up to date. And now you've got a VP of sales sitting there trying to explain why an Excel spreadsheet that the CEO is still running on the side doesn't match up to his pretty graphs and reports.

And you know, where does that fall? He doesn't want to take the fall. So he's coming back to the data team and being like, what did you guys do?

[00:23:57] Speaker A: Yep. Oh, I have some horror stories. You know, speaking of Excel, I saw a horror story where somebody didn't get the precision setting on an Excel spreadsheet set properly and the numbers were wrong. But we could probably do a whole other episode on horror stories.

So, bringing it back to the book, what was the biggest challenge you faced when writing the book?

[00:24:24] Speaker B: Making it a dual source of knowledge, I would say. I really, really wanted to convey what DataOps is, because whether you go with DataOps Live or not, whether you trust what I'm saying, whether you trust what you're saying, it doesn't matter; at the end of the day, DataOps is still important in some way, shape, form or fashion. How can the book reach that audience to convey that? But then also, how can we showcase a tool that can bring all of these concepts and processes together?

I think that was the biggest challenge, because so many times I wanted to focus way more on the technology. Again, putting on my nerd hat, that's where I really wanted to put all the focus. And I had to pull back and be like, no, you need to focus on the methodology as well. The TrueDataOps pillars that we talk about, all of those things need to be conveyed. And so it was that balance of not being too technical, and not making this a book that was just trying to sell a platform, but instead making this a book that was educating the audience.

[00:25:31] Speaker A: Yeah. And to your point, the importance of DataOps as a practice is definitely growing when you've got organizations like Gartner and even ISG that have categories now around DataOps, and even Gartner talking about how important DataOps as a practice needs to be within organizations, and about organizations that are not adopting DataOps practices and their percentage of failure because they don't implement them. So I can definitely appreciate what you said there.

[00:25:59] Speaker B: Yeah.

[00:26:00] Speaker A: So if you could name one takeaway that you hope your readers walk away with when they finish reading the book, which I know is probably not a book you read, you know, from chapter one to chapter two, but you should.

[00:26:13] Speaker B: I've laid it out that way. I've laid it out that way.

[00:26:15] Speaker A: You should.

What do you hope they take away from that when they walk away?

[00:26:21] Speaker B: That they need DataOps in some way, shape, form or fashion. Yes, the second half of the book focuses on the DataOps Live platform.

I would be remiss not to talk about a tool that can bring a lot of this together, but at the end of the day, DataOps is something I feel is going to be critical as data and AI and machine learning evolve. We're already seeing MLOps as an example. And how can you have good MLOps if you don't have good DataOps? So that's what I hope they take away. I mean, again, great tool, but if you don't use the tool, at least use the methodology, the processes that are there. And that's what I want people to really understand. And so that's why the book is laid out the way that it is.

[00:27:02] Speaker A: Yeah. So I know we've been flashing the book up on the screen for those of you who are watching us. But, you know, where are other places people can learn more about you and your book and your other books as well?

[00:27:16] Speaker B: Yeah. So the Amazon link that keeps coming up, you can click the little author link on there and it'll take you to my other book. And I've got another book that's in the works, so it'll be there eventually.

You can find me on LinkedIn. I think those are probably the two biggest. I do some blogging on medium.com so you can go catch some of my blog posts there. But I think that LinkedIn and the author page on Amazon are probably the two best places.

[00:27:46] Speaker A: Yeah. And for those of you who are watching this right now, the links should be in the comments below for where you can get the books yourself or follow Ronnie on LinkedIn and on Medium. So, Ronnie, I want to take the time to thank you for joining me and congratulate you on the new book. Hopefully in a couple of days the launch goes very well, and I think the DataOps community in general appreciates the resource that you've provided to us.

[00:28:19] Speaker B: Thank you. And thank you for having me here, having me on the show. I am really excited about this resource.

Like I said, already thinking through what does that next iteration look like.

DataOps Live is doing so many great things. I already feel that I'm going to have to probably write a part two to this book just to keep up with what you guys are rolling out.

[00:28:40] Speaker A: We're just looking out for you. Right? How can we keep you busy? You know, it's funny you say that, because it's the same for us just keeping up with Snowflake. Right? From our perspective, the industry as a whole is accelerating so fast that it's getting to the point where it's hard to keep up. But again, thank you for joining me.

Well, everybody, on our next episode we will continue our journey toward AI-ready data. We'll feature more practitioners and thought leaders in the industry who are making DataOps real inside their organizations. Until next time.